A leadoff hitter who is too big to steal second base. A first baseman who can’t throw after elbow surgery. A starting pitcher with clubfoot, a congenital deformity of the feet. A batter in his forties who was rejected by every other team because of his age but somehow ended up batting seventh. This is probably the most dysfunctional baseball team anyone could imagine.

Is this team straight out of a comedy movie? No, this is the Oakland Athletics roster from 2000 to 2003. During this period, the A’s made the playoffs four years in a row, which is quite a feat. What makes their success even more remarkable is that the team’s payroll was less than a third of that of the New York Yankees, at the time the richest team in MLB. The contrast between the A’s lackluster roster and the team’s astonishing return on investment remains one of the biggest surprises in MLB’s 140-year history. What was the A’s secret? The key lies in scientific team management, which broke down conventional wisdom and prejudice.

At the time, MLB had degenerated into a game of finances in which wealthy teams lured star players in droves to strengthen their rosters. However, Billy Beane, the A’s general manager, had a knack for spotting the elements that actually lead to success rather than flashy performances.

Billy Beane used data to tear down the wall of conventional wisdom. In a first for a major league team, Beane hired an assistant who had studied statistics and economics and had no background in baseball. Together they used data to identify the specific qualities in players that contributed to victory. The research yielded surprising results, many of which contradicted widespread assumptions. They found that patient batters who could wait for good pitches performed better than aggressive batters; on-base percentage turned out to be a better indicator of offensive success than batting average. Base stealing and bunts, which were overused in games, turned out to contribute much less to victory than widely assumed. Based on these findings, Billy Beane personally oversaw the recruitment process and was able to gather hidden talent that the market had underappreciated and form a great team.
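To make the difference between the two statistics concrete, here is a minimal sketch in Python using the standard definitions of batting average and on-base percentage. The two stat lines are hypothetical numbers chosen for illustration, not figures from the A’s roster, and the function names are likewise illustrative.

```python
def batting_average(hits, at_bats):
    """Batting average: hits divided by at-bats. Walks are ignored."""
    return hits / at_bats


def on_base_percentage(hits, walks, hit_by_pitch, at_bats, sac_flies):
    """On-base percentage: how often the batter reaches base,
    counting walks and hit-by-pitches as well as hits."""
    reached_base = hits + walks + hit_by_pitch
    opportunities = at_bats + walks + hit_by_pitch + sac_flies
    return reached_base / opportunities


# Hypothetical season lines (assumed for illustration only).
aggressive = dict(hits=150, walks=20, hit_by_pitch=2, at_bats=500, sac_flies=3)
patient = dict(hits=135, walks=90, hit_by_pitch=5, at_bats=500, sac_flies=5)

for name, s in (("aggressive", aggressive), ("patient", patient)):
    avg = batting_average(s["hits"], s["at_bats"])
    obp = on_base_percentage(**s)
    print(f"{name}: AVG {avg:.3f}, OBP {obp:.3f}")
# aggressive: AVG 0.300, OBP 0.328
# patient: AVG 0.270, OBP 0.383
```

In this made-up example the aggressive hitter looks better by batting average, yet the patient hitter reaches base far more often, which is the kind of gap a market focused on batting average would overlook.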

We can apply this story to business management. Management styles that are based on common wisdom or a CEO’s leadership are quickly becoming outdated. This paradigm shift was brought about by big data. Before big data, there weren’t sufficient data to substantiate theories, so core strategies were built on experience and common beliefs. Big data is changing all of that. The rapid development of IT infrastructure and the availability of large databases have ushered in a new era in which companies are pressed to find innovative ways to use the immense amounts of data at their disposal. Indeed, data has opened a new age of business administration strategies. There are already many cases of innovative businesses reaping success thanks to scientific decisions based on data analysis and mathematical algorithms. Big data is a new paradigm in itself.

Technicians coined the term big data as they sought to derive meaning from data, which grew explosively with the development of IT tools and technology. It was a technical term used by companies that provided Internet search engines, such as Yahoo and Google, and by IT businesses that provided big data infrastructure, all of which had to store, process and analyze unprecedented amounts of data. Big data may have started as a technical concept, but since 2011 it has become an innovative paradigm that affects not only technology but also society, culture, politics and all other aspects of life. How did this technical term come to change the paradigm so thoroughly?

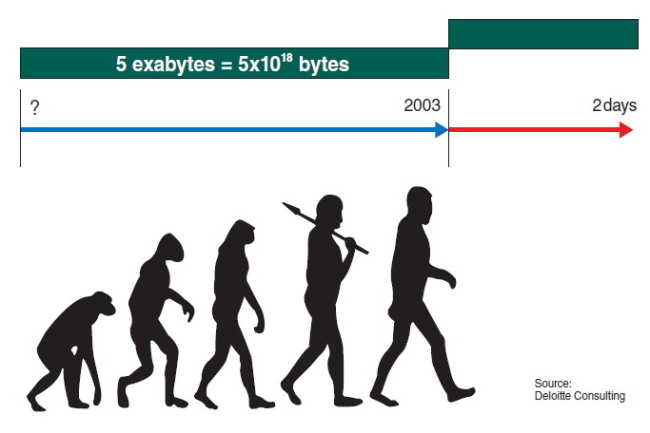

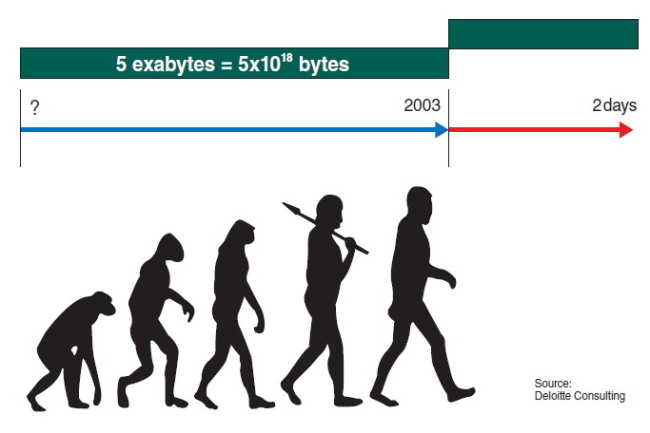

As the picture indicates, mankind produced only 5 exabytes of information between its birth and 2003, which is only a decade ago. Since then, it has come to take only two days to produce the same amount of data. The amount of data that is newly created and accumulated is incomparably larger than before. In terms of sheer volume, it is no exaggeration to say we now live in a new world of digital civilization.
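The figures quoted above can be turned into a rough back-of-the-envelope calculation. The sketch below uses only the two numbers in the text (5 exabytes, two days) and assumes a 365-day year and a constant production rate; it is an illustration of scale, not a measurement.

```python
# Figures taken from the text.
EXABYTES_THROUGH_2003 = 5   # everything produced up to 2003
DAYS_TO_MATCH = 2           # time now needed to produce the same amount

# Assumption: a 365-day year and a steady rate of production.
DAYS_PER_YEAR = 365

exabytes_per_day = EXABYTES_THROUGH_2003 / DAYS_TO_MATCH
exabytes_per_year = exabytes_per_day * DAYS_PER_YEAR
multiples_per_year = exabytes_per_year / EXABYTES_THROUGH_2003

print(f"{exabytes_per_day} exabytes per day")        # 2.5 exabytes per day
print(f"{exabytes_per_year} exabytes per year")      # 912.5 exabytes per year
print(f"{multiples_per_year}x the pre-2003 total each year")  # 182.5x
```

Under these assumptions, a single year now yields roughly 180 times as much data as all of history up to 2003.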

Due to advancements in IT, the spread of personal mobile devices such as smartphones, and the rise of social media such as Twitter and Facebook, mankind is producing more data than ever before. But only a few years ago, big data was jargon used exclusively among scholars, and the distinction between conventional data and big data was made only in terms of volume, so that a petabyte or zettabyte of data automatically qualified as big data. Massive amounts of data that could not be accessed or saved using a conventional database were also referred to as big data. Not even large businesses had the technical capacity to store such data, and even if they could store it, they lacked the capacity to draw meaningful conclusions from it. As a result, data was erased as soon as it was created. In those days, analyzing such large amounts of data was thought to be a privilege reserved for companies with an exceptional talent pool, such as Google.

However, we are seeing a very different trend today. Companies now understand the value of data, and with the spread of technology and tools that enable nonexperts to store and analyze data, collecting and analyzing large amounts of data has become extremely important.

As the price of hardware declined over the past few years and analysis tools grew more advanced, the main users of data expanded from professional data management companies to companies in every sector of business. Logistics companies began to analyze their clients’ order data, which until then they had merely stored. Credit card companies began to pay closer attention to their clients’ consumption patterns. Transportation and logistics companies realized that there was a considerable business opportunity in logistics channels and tourism routes. Advertising companies began to dissect their target audiences’ desires to create more sophisticated advertising strategies, and achieved advertising effects that would have been unimaginable in the past.

When we talk about big data, it is impossible not to mention analytics. Big data analytics differs from conventional business analysis in that it uses sophisticated algorithms and mathematical optimization models to carry out complex calculations in real time and produce results. Only a few years ago, breaking down such large amounts of data and using it to make real-time decisions was something only professional data management companies such as Google could do. However, progress in computing capacity and the introduction of analytics software have made it possible for any company, not only a few data management companies, to use highly developed mathematical tools to analyze data and make real-time decisions.

In the traditional paradigm of data, each type of data is produced by its own source and kept as is, with all sorts of data lumped together. In the big data era, by contrast, diverse types of data can be freely linked as required, and linked data can in turn be separated for detailed analysis. Data is no longer left alone in storage in its original form; rather, it is now freely linked and separated as needed.

In addition, a results-focused approach is critical to big data analysis. In the past, there wasn’t enough data to draw accurate conclusions. Data analysis therefore started by collecting whatever data was available and then working out how it could be used. In other words, experts would collect and analyze data, deduce its meaning and report on what it meant. As a result, data analysis was largely limited to analyzing past data. But in the big data era, the focus of data analysis is on results. That is, one can set very specific goals (how to increase the sales of a given product, how to raise the number of patrons at a given store), determine what decisions must be made to meet those goals, and only then collect data. Unlike traditional analysis, big data analysis places data collection at the very last stage. This is based on the premise that one can always obtain a sufficient amount of the information one needs.

Big data is not a simple leap in technology or an IT trend. Throughout the history of mankind, decisions have been made on the premise that the necessary information and data are lacking. Even when enough data existed, it was impossible to amass it in real time and incorporate it into the decision-making process.

However, the IT sector has made huge strides in computing capacity, as illustrated by the widespread use of smartphones, and this has helped to overcome these limitations. Now, we can use smartphones to collect the data we need at any time and use that information to make decisions. This means that we live in a new reality. In the past, the premise was that the information required to make good decisions was scarce.

In today’s reality, we can always get the data we need for immediate decision making. Customers can already use their smartphones to find out which store offers the best prices while they are shopping. Global fashion brand Zara has an algorithm that uses real-time sales and inventory data to decide which products to place in which stores.

Now, the method of decision making itself is changing. This is the new paradigm brought forth by big data, and it should be used to establish a new business structure that fits the current times.

By Jang Young-jae, KAIST professor of industrial and systems engineering